Google's Recommended Guide for Search Engine Optimization (SEO)

Who is this guide for?

If you own, manage, monetize, or promote online content via Google Search, this guide is meant for you. Whether you are the owner of a growing business, a webmaster of several sites, an SEO specialist at an agency, or a DIY SEO enthusiast, this overview of SEO basics according to our best practices should be useful to you.

While this guide won’t reveal “secrets” to automatically rank first (sorry!), following these best practices will make it easier for search engines to crawl, index, and understand your content.

Search Engine Optimization (SEO) is often about making small, incremental modifications to parts of your website. Individually, these changes might seem minor, but combined with other optimizations, they can noticeably affect your site’s user experience and performance in organic search results.

Optimization should always be geared toward making the user experience better. One of your site’s “users” is a search engine, which helps other people discover your content. SEO is about helping search engines understand and present your content effectively.

Getting Started

Glossary

Here is a short glossary of important terms used in this guide:

- Index: Google stores all web pages it knows about in its index. To “index” a page is to fetch, read, and add it to this database.

- Crawl: The process of looking for new or updated web pages. Google discovers URLs by following links, by reading sitemaps, and by other means.

- Crawler: Automated software (like Googlebot) that fetches pages from the web.

- Googlebot: The generic name of Google’s crawler, which constantly explores the web.

- SEO: Search Engine Optimization is the process of making your site better for search engines, or the title of a person who performs this task.

Are you on Google?

To determine whether your site is in Google’s index, perform a site: search for your site’s URL (e.g., site:yourdomain.com). If results appear, you’re indexed.

If your site is missing, it might be because:

- It’s not well-connected by links from other sites.

- It’s a brand-new site and hasn’t been crawled yet.

- The site design makes it difficult for Google to crawl effectively.

- Google encountered an error while crawling.

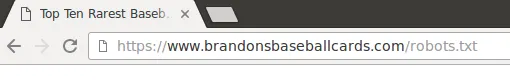

- Your policy (robots.txt) blocks Google.

How do I get my site on Google?

Inclusion in Google’s search results is free. You don’t even need to submit your site; Google is fully automated and constantly explores the web for new content.

However, Google Search Console provides tools to help you submit content and monitor performance. If critical issues arise, Search Console can send you alerts. Sign up for Search Console.

Ask yourself these basic questions:

- Is my website showing up on Google?

- Do I serve high-quality content?

- Is my local business showing up on Google?

- Is my content fast and easy to access on all devices?

- Is my website secure?

For more information, visit Google Webmasters. You can also download a printable checklist at Webmaster Checklist.

Do you need an SEO expert?

While this guide will help you optimize your site, you may consider hiring a professional for an audit. Hiring an SEO is a big decision; ensure you research the advantages and potential risks. Services often include:

- Content and structure reviews.

- Technical advice (hosting, redirects, error pages).

- Content development and keyword research.

- SEO training.

We recommend learning how search engines work by reading:

- How Google crawls, indexes, and serves the web

- Google Webmaster Guidelines

- How to hire an SEO (Video)

Help Google Find Your Content

The best way to ensure Google finds your site is to submit a sitemap. This file tells search engines about new or changed pages. Google also finds pages through links from other sites.
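A sitemap is typically an XML file that follows the sitemaps.org protocol. The minimal sketch below, using only Python's standard library, builds one from a list of page URLs with optional last-modified dates. The function name `build_sitemap` and the example URLs are hypothetical; a real site would usually generate the list from its CMS or router.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    `lastmod` is a W3C/ISO 8601 date string such as "2024-01-15",
    or None to leave the <lastmod> element out for that URL.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, >
        if lastmod is not None:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/products?id=1&color=blue", None),
]))
```

You could then serve the output at a URL such as /sitemap.xml and submit that URL in Search Console.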

Tell Google Which Pages Shouldn’t Be Crawled

[!TIP] Best Practices:

- Use robots.txt for non-sensitive information to block unwanted crawling.

A robots.txt file tells search engines if they can access parts of your site. It must be named robots.txt and placed in the root directory.
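Because the file must sit in the root directory, its location can be derived from any page URL. The small sketch below uses Python's `urllib.parse`; the helper name `robots_txt_url` is hypothetical. Robots.txt rules are scoped to a single scheme, host, and port, so a subdomain such as shop.example.com needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL that governs `page_url`.

    robots.txt rules are scoped to one scheme, host, and port, so the file
    is always looked up at the root path of that origin.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://shop.example.com/shoes/running?size=42"))
# https://shop.example.com/robots.txt
```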

[!CAUTION] Avoid:

- Letting your internal search results pages be crawled.

- Allowing URLs created by proxy services to be crawled.
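Python's standard library includes `urllib.robotparser`, which can check URLs against a set of rules before you publish them. The sketch below assumes a hypothetical site whose internal search results live under /search. This parser implements basic prefix matching and may not handle every extension Google supports (such as `*` and `$` wildcards inside paths), so treat its answers as an approximation of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of internal search result pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""


def crawl_status(robots_lines, urls, user_agent="Googlebot"):
    """Map each URL to True if the rules allow `user_agent` to crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse local rules instead of fetching them
    return {url: parser.can_fetch(user_agent, url) for url in urls}


status = crawl_status(
    ROBOTS_TXT.splitlines(),
    ["https://example.com/search?q=shoes", "https://example.com/products/shoes"],
)
for url, allowed in status.items():
    print(url, "crawlable" if allowed else "blocked")
```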

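For sensitive information, use more secure methods: robots.txt is not a security measure. The file itself is publicly readable, and a URL blocked by robots.txt can still be indexed if other pages link to it. Use a noindex robots meta tag for pages you don’t want in search results (the page must remain crawlable so Google can see the tag), or use password protection for truly sensitive data.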
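A noindex directive is commonly written as `<meta name="robots" content="noindex">` in the page's `<head>`. As a rough self-check, the sketch below uses Python's `html.parser` to read the robots meta tags in a page's HTML. The class and function names are hypothetical, and the sketch ignores the equivalent `X-Robots-Tag` HTTP header, which a complete check would also inspect.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)  # attribute names arrive lowercased
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def is_noindex(html):
    """True if the page's robots meta tags ask search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return bool(parser.directives & {"noindex", "none"})


print(is_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))  # True
```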

Help Google (and users) Understand Your Content

Let Google see your page the same way a user does

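For optimal rendering and indexing, always allow Googlebot access to the JavaScript, CSS, and image files your pages use. Blocking these assets via robots.txt directly harms how well Google’s algorithms render and index your content, and can result in suboptimal rankings.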
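To catch this mistake, you could test your robots.txt rules against the asset URLs your pages load. The sketch below uses Python's `urllib.robotparser` with hypothetical rules and URLs. Its matching may differ slightly from Googlebot's, so Search Console's URL Inspection tool remains the authoritative way to see how Google renders a page.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_lines, asset_urls, user_agent="Googlebot"):
    """Return the asset URLs that the given robots.txt rules block for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that accidentally blocks the site's JavaScript directory.
rules = [
    "User-agent: *",
    "Disallow: /assets/js/",
]
assets = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
    "https://example.com/images/logo.png",
]
print(blocked_assets(rules, assets))  # ['https://example.com/assets/js/app.js']
```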

Create unique, accurate page titles

The `<title>` tag tells users and search engines the topic of a page. Place it within the `<head>` element and ensure every page has a unique title.

[!TIP] Best Practices:

- Accurately describe the page’s content.

- Write titles that read naturally.

[!WARNING] Avoid:

- Titles unrelated to the content.

- Default or vague titles like “Untitled”.

- Extremely lengthy titles or keyword stuffing.
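These title guidelines lend themselves to a simple automated audit. The sketch below extracts each page's `<title>` with Python's `html.parser` and flags missing, vague, very long, and duplicated titles. The function and class names are hypothetical, and both the vague-title list and the 60-character threshold are illustrative assumptions, not limits published by Google.

```python
from html.parser import HTMLParser

# Illustrative default titles (an assumption); extend for your own CMS.
VAGUE_TITLES = {"untitled", "new page", "new page 1"}


class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_titles(pages, max_length=60):
    """Return {url: [problems]} for a mapping of URL -> HTML.

    `max_length` is an arbitrary example threshold, not a Google limit.
    """
    titles = {}
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        parser.close()
        titles[url] = " ".join(parser.title.split())  # collapse whitespace

    problems = {url: [] for url in pages}
    urls_by_title = {}
    for url, title in titles.items():
        if not title:
            problems[url].append("missing title")
            continue
        if title.lower() in VAGUE_TITLES:
            problems[url].append("default or vague title")
        if len(title) > max_length:
            problems[url].append("very long title")
        urls_by_title.setdefault(title.lower(), []).append(url)

    # Every page that shares its title with another page gets flagged.
    for urls in urls_by_title.values():
        if len(urls) > 1:
            for url in urls:
                problems[url].append("duplicate title")
    return problems
```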

Use the “description” meta tag

A page’s description meta tag gives search engines a summary of what the page is about. Google might use it as the snippet for the page in search results.

[!TIP] Best Practices:

- Summarize content accurately.

- Provide information relevant enough for users to determine the page’s utility.

- Use unique descriptions for every page.
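Missing or duplicated descriptions are easy to overlook on a large site. The sketch below, in standard-library Python with hypothetical names, reads `<meta name="description">` from each page and reports pages without one as well as descriptions shared by several pages.

```python
from collections import defaultdict
from html.parser import HTMLParser


class DescriptionParser(HTMLParser):
    """Capture the content attribute of <meta name="description">."""

    def __init__(self):
        super().__init__()
        self.description = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            # Collapse runs of whitespace so near-identical copies compare equal.
            self.description = " ".join((attrs.get("content") or "").split())


def audit_descriptions(pages):
    """Return (missing, duplicates) for a mapping of URL -> HTML.

    `duplicates` maps each shared description to the URLs that use it.
    """
    missing = []
    urls_by_description = defaultdict(list)
    for url, html in pages.items():
        parser = DescriptionParser()
        parser.feed(html)
        parser.close()
        if parser.description:
            urls_by_description[parser.description].append(url)
        else:
            missing.append(url)
    duplicates = {d: urls for d, urls in urls_by_description.items() if len(urls) > 1}
    return missing, duplicates
```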

Use heading tags to emphasize important text

Semantic headings (h1 through h6) help create a hierarchical structure, making it easier for users to navigate.

[!WARNING] Avoid:

- Placing text in headings that doesn’t help define the page’s structure.

- Using headings purely for styling.

- Excessive use of heading tags.
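One way to review a page's heading structure is to extract its outline. The sketch below records every h1 through h6 element with Python's `html.parser` and flags headings that jump more than one level deeper than the previous heading (for example, an h4 directly after an h2). The names are hypothetical, and the skipped-level check is a common accessibility heuristic, not a documented Google ranking rule.

```python
from html.parser import HTMLParser

HEADING_LEVELS = {f"h{i}": i for i in range(1, 7)}


class HeadingOutline(HTMLParser):
    """Record [level, text] for every h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # level of the heading being read, if any

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_LEVELS:
            self._current = HEADING_LEVELS[tag]
            self.headings.append([self._current, ""])

    def handle_endtag(self, tag):
        if tag in HEADING_LEVELS:
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.headings[-1][1] += data


def skipped_levels(html):
    """Return (level, text) for headings that skip a level below the previous one."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    problems = []
    previous = None
    for level, text in parser.headings:
        if previous is not None and level > previous + 1:
            problems.append((level, " ".join(text.split())))
        previous = level
    return problems
```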

Add structured data markup

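Structured data is code you can add to your pages to describe your content to search engines. It helps them understand your content and can make your pages eligible to appear as “rich results” (e.g., product prices, star ratings, opening hours).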

You can mark up various entities:

- Products and prices.

- Business location and opening hours.

- Recipes and videos.

- Company logos.
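Google recommends JSON-LD as the format for structured data. The sketch below builds a schema.org `LocalBusiness` object covering a business location, opening hours, and logo, and wraps it in a `<script type="application/ld+json">` tag. The function name and business details are placeholders; Google's structured data documentation lists which properties each rich result type actually uses, so check it (and the Rich Results Test) before deploying.

```python
import json


def local_business_jsonld(name, street, city, opening_hours, logo_url):
    """Return a JSON-LD <script> tag describing a schema.org LocalBusiness.

    `opening_hours` uses schema.org's openingHours text format,
    e.g. ["Mo-Fr 09:00-17:00"].
    """
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
        },
        "openingHours": opening_hours,
        "logo": logo_url,
    }
    # Escape "</" so a value can never close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(local_business_jsonld(
    "Example Bakery",
    "1 Main Street",
    "Springfield",
    ["Mo-Fr 07:00-18:00", "Sa 08:00-13:00"],
    "https://www.example.com/logo.png",
))
```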